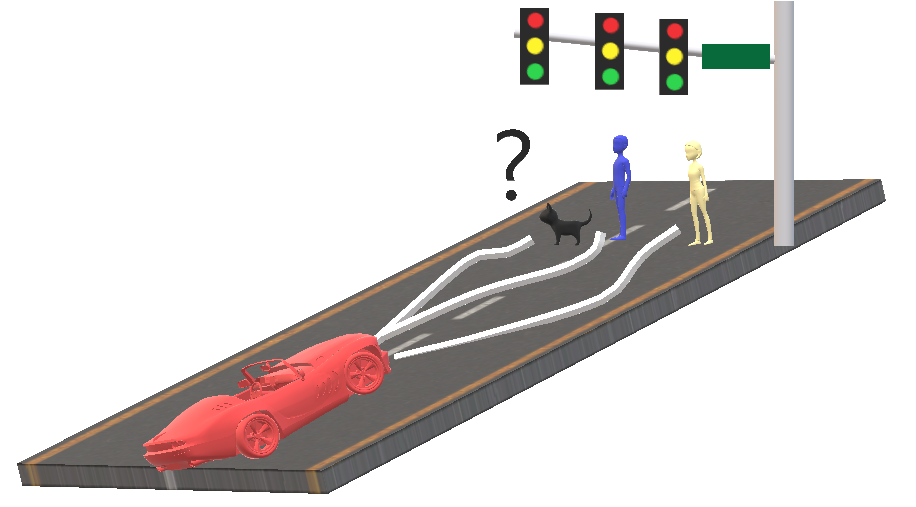

To kill or not to kill: that is the question, to adapt Shakespeare. Self-driving cars are machines that do not make decisions on their own. Biased or unbiased, fair or unfair, the cars do not really know the difference (at least not with current advances in AI).

“The literature on self-driving cars and ethics continues to grow. Yet much of it focuses on ethical complexities emerging from an individual vehicle… including ‘‘trolley-type’’ scenarios.” (Borenstein, Herkert & Miller, 2019)

Borenstein, Herkert and Miller point to the infamous trolley problem, which has been debated for quite some time now. What is the trolley problem? It is a hypothetical question along the lines of “what would you decide if a trolley were about to hit either a child or an old person?” Philippa Foot came up with this thought experiment long before locomotives became automated to any extent.

Ever since Foot’s publication in the Oxford Review in 1967, a number of other hypothetical scenarios have been proposed by philosophers and others alike, some serious in nature and some for comedic purposes. Whether serious or comic (or indeed tragic), the thought experiments share a number of similarities.

The main aim of the “Trolley Problem” was to put ideas forth and answer them with the best ethical solution.

“The trolley problem is a thought experiment in ethics. The general form of the problem is this: You see a runaway trolley moving toward five tied-up (or otherwise incapacitated) people lying on the tracks. You are standing next to a lever that controls a switch. If you pull the lever, the trolley will be redirected onto a side track, and the five people on the main track will be saved. However, there is a single person lying on the side track. You have two options:

- Do nothing and allow the trolley to kill the five people on the main track.

- Pull the lever, diverting the trolley onto the side track where it will kill one person.

Which is the more ethical option?” (Wikipedia, 2019)

The role of bias and “Trolley”

“Since an action is evil if and only if it harms or tends to harm its patient, evil, understood as the harmful effect that could be suffered by the interacting patient, is properly analysed only in terms of possible corruption, decrease, deprivation or limitation of p’s welfare, where the latter can be defined in terms of the object’s appropriate data structures and methods.”

Luciano Floridi (Artificial Evil and the Foundation of Computer Ethics)

The trolley problem is a typical question for exposing bias and fairness in a decision. Self-driving cars are a technology that could bring a huge change to society. One day these cars may take over the highways and roads of every city in the world. To replace the human brain with a few microchips, these cars must be “unbiased” in their decisions and make the right choice.

A biased driverless car is more dangerous than a criminal driving a car: the criminal would at least know what to hit and what not to hit, even in the heat of a police chase.

Thomas Aquinas covered this a long time ago… The trolley problem and similar puzzles are the equivalent of heads I win, tails you lose. It seems a joke, but it is irresponsibly distracting. The solution is not what to do once we are in a tragic situation, but to ensure that we do not fall into one. So the real challenge, in this case, is good design.

BCS (British Computer Society)

Researchers at the Georgia Institute of Technology recently published a paper that tried to find out how biased these “state-of-the-art” technologies are. The study took samples, mapped the color of each person’s skin to a number, and fed that number to an algorithm for decision making.

According to the study, dark-skinned people are more likely to be hit than light-skinned people. Even though AI is a state-of-the-art tool that is supposed to be “unhampered” by human intervention, the bias still arises.

We give evidence that standard models for the task of object detection, trained on standard datasets, appear to exhibit higher precision on lower Fitzpatrick skin types than higher skin types……… We have shown that simple changes during learning (namely, reweighing the terms in the loss function) can partially mitigate this disparity

Predictive Inequity in Object Detection
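The reweighting idea mentioned in the quote above can be sketched in a few lines. This is a minimal illustration and not the paper's implementation: the function name `reweighted_loss`, the group labels, and the per-example loss values are all hypothetical, and inverse-frequency weighting is just one simple way to choose the weights.

```python
from collections import Counter


def reweighted_loss(losses, groups):
    """Average per-example losses so that every group counts equally.

    losses: per-example loss values (hypothetical numbers).
    groups: group label for each example, e.g. a skin-type bucket.
    Each example is weighted by 1 / (size of its group), so an
    under-represented group is not drowned out by the majority.
    """
    if not losses:
        raise ValueError("losses must not be empty")
    if len(losses) != len(groups):
        raise ValueError("losses and groups must have the same length")
    counts = Counter(groups)
    weights = [1.0 / counts[g] for g in groups]
    total_weight = sum(weights)  # equals the number of distinct groups
    return sum(w * l for w, l in zip(weights, losses)) / total_weight


# Hypothetical batch: three "light" examples with low loss,
# one "dark" example with high loss.
losses = [0.2, 0.2, 0.2, 1.0]
groups = ["light", "light", "light", "dark"]

plain_mean = sum(losses) / len(losses)
balanced = reweighted_loss(losses, groups)
print(plain_mean, balanced)
```

With these made-up numbers the plain mean is about 0.4 while the balanced loss is about 0.6, so errors on the under-represented group weigh more heavily during training.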

A study says that there are biases in how self-driving cars make decisions, not only about the safety of their passengers but also about whom they might hit.

With these biases, taking the car even on the highway is not safe. What if the car is going through a crowded city of dark-skinned people? Will it kill everyone in its way?

These biases arise when self-driving cars are given a population sample with little to no representation of the variation in people’s skin color.

State-of-the-art technology like self-driving cars must rely on machines that are 100% accurate; there must be no setback or compromise that could lead to loss of life or property.

Bias in the decisions of self-driving cars can sometimes be reasoned about: kill 100 people to save 1, or kill 1 person to save 100? A human might decide based on favoritism, but how does a machine that understands the world only through past data decide, without any emotion?

To keep the city safe, if driverless cars are here for the long run, they might have to make sacrifices. But these sacrifices must not be based on bias, only on probability.

In the movie I, Robot, the robot saves the man rather than the child. This might seem to go against human nature, but from the machine’s perspective it was the only option, because it calculated that the man was more likely to survive than the child.
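The purely probabilistic choice described above can be sketched as follows. This is an illustration under stated assumptions, not how any real vehicle or the film's robot is implemented: the `Candidate` type, the `choose_rescue` function, and the survival probabilities are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    label: str
    survival_probability: float  # hypothetical estimate in [0, 1]


def choose_rescue(candidates):
    """Pick the candidate with the highest estimated survival probability.

    Only the probability is compared; age, appearance and every other
    attribute are deliberately ignored.
    """
    if not candidates:
        raise ValueError("no candidates to choose from")
    return max(candidates, key=lambda c: c.survival_probability)


# Made-up numbers for illustration only.
man = Candidate("man", 0.45)
child = Candidate("child", 0.11)
print(choose_rescue([man, child]).label)
```

A rule like this is only as fair as the probability estimates it is given; if those estimates come from skewed data, the bias returns through the numbers.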

“a paradox about self-driving cars … people say they want an autonomous vehicle to protect pedestrians, even if it means sacrificing its passengers — but also that they wouldn’t buy self-driving vehicles programmed to act in this way.”

“No matter their age, gender or country of residence, most people spared humans over pets, and groups of people over individuals.”

Maxmen, 2018

Such a probability-based decision, even if it disregards human nature, is unbiased to an extent.

Bibliography:

- https://www.vox.com/future-perfect/2019/3/5/18251924/self-driving-car-racial-bias-study-autonomous-vehicle-dark-skin

- https://www.washingtonpost.com/science/2018/10/24/self-driving-cars-will-have-decide-who-should-live-who-should-die-heres-who-humans-would-kill/?noredirect=on&utm_term=.08134d06505a

- https://www.washingtonpost.com/news/innovations/wp/2015/12/29/will-self-driving-cars-ever-solve-the-famous-and-creepy-trolley-problem/?utm_term=.4a18812498fc